Computers barely existed 50 years ago. Well... there were some around, but let's say they were toys for scientists rather than machines of mass production. Still, they existed. The first concepts of software development were put in place, and the first paradigms of software development were defined.

In the late 1950s, Lisp was developed at MIT as the first functional programming language. Computers were few, and besides Fortran and assembly language, Lisp was one of the very few ways to program them.

Twenty years later, structured programming started to gain traction, with support from IBM. Languages like B, C and Pascal started to emerge. Let's consider this the first revolution in software development. We started with functional programming, and then we got structured programming, something totally different. It was groundbreaking, and it took about 20 years to emerge. While this seems a long time now, what was it, really? Less than half the time between industrial revolutions, which tend to come every 50 years.

The pace of evolution in software kept accelerating. About ten years later, or even less, Smalltalk was made public for a wide audience in August 1981. Developed at Xerox PARC, it was the next big thing in computer science.

While other paradigms came along in the following years, these three remained the only ones with wide adoption.

But what about hardware? How far did we come on hardware?

How many of you can remember the very moment when you interacted with a computer for the first time? Let your memory bring back that moment. Remember what you did, who you were with... A friend? Maybe your parents? Maybe a salesman trying to convince your parents to buy a computer? Doesn't matter. Remember that very moment. Remember that computer. Remember the screen. How many colors did it have? Was it a green-on-black text console, a high-resolution CRT, or a Full HD widescreen? What about the keyboard? The mouse... if it had even been invented by then? What about the smell of the place? What about the sound of the machine?

Was it magical? Was it stressful? Was it joyful?

I remember... It was about 30 years ago. My father took me to the local computer center, his workplace. Yes, he is a software developer, one of the first generation in my country (Romania). We played a kind of Pong game: on a black background, two green lines lit up, one at each side.

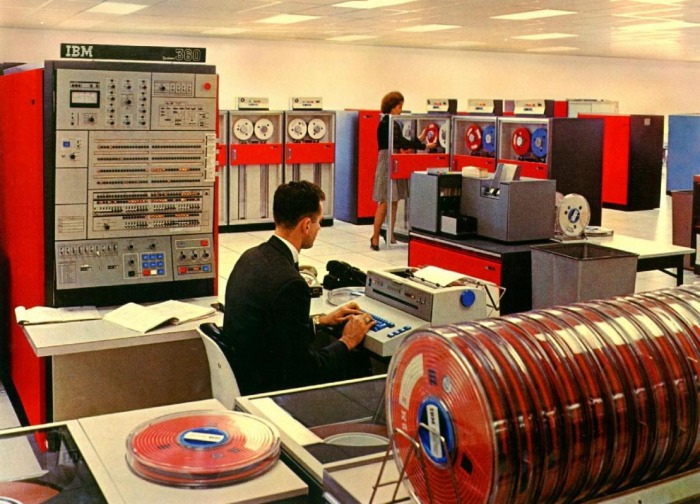

It looked similar to this image, though this one seems highly detailed compared to the image in my memory. And it was running on something like this.

Well, it wasn't this particular computer, nor even an IBM. It was a copy of capitalist technology, developed as a proud product of a communist regime. It was a Romanian computer, a Felix.

The Felix was a very small computer compared to its predecessors. It could easily fit into a single large room, maybe 30-40 square meters. And it even had a terminal where you could see your code. Why was this such a big revolution? It's just a screen and a keyboard, after all. Yes, but your code went directly onto magnetic tape, and then, in just a couple of hours, you could run your program. That is, if you made no typos.

Before the magnetic tape and console revolution, there were punch cards and printers. Programmers wrote their code on graph paper, usually in Fortran or other languages of the era.

Then someone else typed in all the code at a punch card station. Please note, the person transcribing your handwriting into computer language had little computer or software knowledge. It was a totally different job. Software developers used paper and pencil, not keyboard and mouse. They were not even allowed to approach the computer.

The result was a big stack of punch cards like this.

Then a computer technician loaded these cards into the mainframe.

Overnight, the mainframe, the size of a whole floor and requiring several dedicated connections to the high-voltage power grid, processed all the information and printed the result on paper.

The next day, the programmer read the output and interpreted the result. If there was an error, a bug or a typo, the whole stack had to be retyped, because punch cards were sequential. If you were lucky, you could find a fix that affected only a small number of cards and that used exactly the same number of characters, so it fit into the exact same region of memory.

In other words, it took a day or more to integrate the written software with the rest of the pieces and produce something useful. Magnetic tape reduced that to a few hours. Hard disks and more powerful processors in the '90s reduced it further, to tens of minutes.

I remember when I installed my first Linux operating system. I had an Intel Celeron 2 processor. It was Slackware Linux, and I had to compile its kernel at install time. It took the computer a few hours to finish. A whole operating system kernel! That was amazing. I could leave it working in the evening and have it compiled in the morning. Of course, I broke the whole process a few times, and it took me about two weeks to set it up. It seemed so fast back then.

I work at Syneto. Our software product is an operating system for enterprise storage devices. That means a kernel, a set of user-space tools, several programming languages, and our management software running on top of all these. We not only have to integrate the pieces of the kernel so they work together, but also the C compiler, PHP, Python, a package manager, an installer, about two dozen CLI tools, about 100 system services, and all the management software, into a single entity that works as a whole and is more than the sum of its parts.

We can go from zero to hero in about an hour. That means compiling everything from source code, from the kernel to Midnight Commander, from Python to PHP. We even compile the C compiler we use.

But most of the time we don't have to do this. It is absolute overkill and a waste of computing resources. We usually have most of the system already compiled, and we recompile only the bits and pieces that recently changed.
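Deciding what "recently changed" means can be sketched in a few lines. This is a minimal illustration, not Syneto's actual build system: it assumes each module has a name and its source available as a string, and that we know which modules depend on which (the `deps` mapping and both function names are hypothetical). A module is rebuilt if its content hash differs from the one recorded at the last build, or if anything it depends on is being rebuilt.

```python
import hashlib


def content_hash(source: str) -> str:
    """Return a stable fingerprint of a module's source code."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def modules_to_rebuild(sources, previous_hashes, deps):
    """Return the set of modules that must be recompiled.

    sources:         {module: current source text}
    previous_hashes: {module: hash recorded at the last successful build}
    deps:            {module: set of modules it depends on} (hypothetical)
    """
    # Step 1: modules whose own source changed, or that were never built.
    dirty = {
        name for name, src in sources.items()
        if previous_hashes.get(name) != content_hash(src)
    }
    # Step 2: keep adding dependents of dirty modules until nothing changes.
    changed = True
    while changed:
        changed = False
        for name, needs in deps.items():
            if name not in dirty and needs & dirty:
                dirty.add(name)
                changed = True
    return dirty


sources = {"kernel": "v2", "libc": "v1", "shell": "v1", "docs": "v1"}
previous = {name: content_hash("v1") for name in sources}
deps = {"libc": {"kernel"}, "shell": {"libc"}, "docs": set()}
print(sorted(modules_to_rebuild(sources, previous, deps)))  # ['kernel', 'libc', 'shell']
```

Only the kernel's source changed here, yet libc and the shell are rebuilt too because they sit downstream of it; the documentation is left alone. Real build tools track file timestamps or hashes per file rather than per module, but the propagation idea is the same.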

When a software developer changes the code, it is saved on a server. Another server periodically checks the source code. When it detects a change, it recompiles that small piece of the application or module and saves the result to another computer, which publishes the update. Then yet another computer installs the update so that the developer can see the result.
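The loop described above (poll the repository, rebuild on change, publish the result, update a machine) can be sketched as follows. The stages are plain functions supplied by the caller; `fetch_revision`, `build`, `publish` and `deploy` are hypothetical placeholders, not the API of any real CI server, and the in-memory fakes at the bottom exist only to make the sketch runnable.

```python
import time
from typing import Callable, List, Optional


def ci_poll_loop(
    fetch_revision: Callable[[], str],
    build: Callable[[str], str],
    publish: Callable[[str], None],
    deploy: Callable[[str], None],
    interval_seconds: float = 0.0,
    max_polls: int = 3,
) -> List[str]:
    """Poll for new revisions; build, publish and deploy each new one.

    Returns the artifacts produced, in order.
    """
    last_seen: Optional[str] = None
    artifacts: List[str] = []
    for _ in range(max_polls):
        revision = fetch_revision()       # periodically check the source code
        if revision != last_seen:         # something has changed
            artifact = build(revision)    # recompile the changed piece
            publish(artifact)             # store it where updates are served from
            deploy(artifact)              # update the developer's machine
            artifacts.append(artifact)
            last_seen = revision
        time.sleep(interval_seconds)
    return artifacts


if __name__ == "__main__":
    # In-memory fakes standing in for a repository, a build server and an update server.
    revisions = iter(["r1", "r1", "r2"])
    published, deployed = [], []
    result = ci_poll_loop(
        fetch_revision=lambda: next(revisions),
        build=lambda rev: f"module-{rev}.pkg",
        publish=published.append,
        deploy=deployed.append,
    )
    print(result)  # ['module-r1.pkg', 'module-r2.pkg']
```

Polling keeps the sketch simple; many CI setups instead let the repository notify the build server through a hook, which removes the polling delay.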

What is amazing in this scheme is how little software development itself has changed, and how much everything around software developers has changed. We eliminated the technicians typing in the handwritten code ... we are now allowed to use a keyboard. We eliminated the technician loading the punch cards into the mainframe ... we just send the code over the network. We eliminated the delivery guy taking the disc to the customer ... we use the Internet. We eliminated the support guy installing the software ... we do automatic updates.

All these tools, networks, servers and computers eliminated a lot of jobs, except one: the software developer. Will we become obsolete in the future? Maybe, but I wouldn't start looking for another career just yet. In fact, we will need to write even more software. Nowadays everything uses software. Your car may very well have over 100 million lines of code in it. Software controls the world, and the number of programmers doubles every five years. We are so many, producing so much code, that our reliance on automated and ever more complex systems will keep growing.

Five years ago, Continuous Delivery (or Continuous Deployment) was a myth, a dream. Fifteen years ago, Continuous Integration was a joke! We were doing Waterfall. Management controlled the process. Why would you integrate continuously? You do that only once, at the end of the development cycle!

Agile Software Development changed our industry considerably. It communicated in a way that businesses could understand, and most businesses embraced it, at least partially. What remained lagging behind were the tools and technical practices. In many ways, they are still light years behind organizational practices like Scrum, Lean, Sprints, etc. in maturity.

TDD, refactoring and similar practices are barely getting noticed, far from the mainstream. And they are even older than Agile! Continuous Integration and Continuous Delivery systems, however, are getting noticed. Their big advantage over other technical practices is that business can relate to them. We, the programmers, can say: "Hey, you wanted us doing Scrum. You want us to deliver. You will need an automated system for that. We need the tools to deliver the business value you require from us at the end of each iteration."

Technical practices are hard to quantify economically, at least immediately or tangibly. Yeah, yeah... We can argue about code quality, legacy code and technical debt. But these are just too abstract for most businesses to relate to in any sensible manner.

But CI and CD? Oh man! They are gold! How many companies deliver software over the web as web pages? How many deliver software to mobile phones? The smartphone boom virtually opened the road ... the highway ... for continuous delivery!

Trends for "Smartphone"

Trends for "Continuous delivery"

Trends for "Continuous deployment"

It is fascinating to observe how the smartphone and CD trends tipped in 2011. The smartphone business embraced these technologies almost instantaneously. However, CI was unaffected by the rise of smartphones.

Trends for "Continuous Integration"

So what tipped CI? Google Trends has no data earlier than 2004, so we cannot see the very beginning. In my opinion, the gradual adoption of Agile practices tipped CI.

Trends for "Agile software development"

The trends have the same growth. They go hand-in-hand.

Continuous deployment and delivery will soon overtake CI. They are getting mature and they will continue to grow. Will CI have to catch up with them? Probably.

Continuous integration is about taking the pieces of a larger piece of software, putting them together, and making sure nothing breaks. In a sense, CI wraps your technical practices in business value. You need tests for the CI server to run, so you might as well write them first. You can do TDD, and the business will understand it. The same goes for other techniques.
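Here is "write the tests first and let the CI server run them" in miniature, using Python's standard `unittest` module. The function `parse_version` and its tests are hypothetical examples chosen for brevity; what matters is the order (test first, then the simplest passing code) and the last function, which runs the suite the way a CI job might and turns the outcome into a pass/fail exit code.

```python
import unittest


# Written first (TDD): the tests describe the behaviour we want.
class ParseVersionTest(unittest.TestCase):
    def test_parses_dotted_version(self):
        self.assertEqual(parse_version("4.2.1"), (4, 2, 1))

    def test_rejects_garbage(self):
        with self.assertRaises(ValueError):
            parse_version("four.two")


# Written second: the simplest code that makes the tests pass.
def parse_version(text: str) -> tuple:
    """Turn a dotted version string such as '4.2.1' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def run_suite() -> int:
    """Run the tests as a CI job would; return a process exit code (0 = pass)."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ParseVersionTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return 0 if result.wasSuccessful() else 1


if __name__ == "__main__":
    code = run_suite()
    print("CI status:", "passed" if code == 0 else "failed")
```

A real job would hand the return value of `run_suite()` to `sys.exit`, so the CI server marks the build as broken whenever a test fails.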

Continuous deployment means that after your software is compiled, an update becomes available on your servers. Then the client's operating system (e.g. Windows) shows a small pop-up saying there are updates.

Continuous delivery means that after the previous two processes are done, the solution is delivered directly to the client. One example is the Gmail web page. Do you remember it sometimes saying that Gmail had been updated and you should refresh? Or the applications on your mobile phone: they update automatically by default. One day you have one version, the next day a new one, and so on, without any user intervention.
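Both flows start from the same check: the client compares its installed version with the newest one the server advertises. The sketch below assumes versions are plain dotted numbers such as `3.10.0` and that the server's answer is already available as a string; the function names are hypothetical and no real update protocol or endpoint is implied. The `auto_apply` flag separates the pop-up that asks the user (the Windows-style flow) from an update applied silently (the Gmail and mobile-app flow).

```python
def parse_version(text: str) -> tuple:
    """'3.10.2' -> (3, 10, 2); tuples compare element by element."""
    return tuple(int(part) for part in text.strip().split("."))


def check_for_update(installed: str, latest: str, auto_apply: bool) -> str:
    """Decide what the client should do; returns a short action label."""
    if parse_version(latest) <= parse_version(installed):
        return "up to date"
    if auto_apply:
        return f"installing {latest} silently"
    return f"show pop-up: version {latest} is available"


print(check_for_update("3.9.0", "3.10.0", auto_apply=False))  # show pop-up: version 3.10.0 is available
print(check_for_update("3.9.0", "3.10.0", auto_apply=True))   # installing 3.10.0 silently
print(check_for_update("3.10.0", "3.9.9", auto_apply=True))   # up to date
```

Comparing numeric tuples instead of raw strings avoids the classic bug where `"3.10.0" < "3.9.0"` as text. The sketch ignores pre-release tags and treats `3.1` and `3.1.0` as different versions.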

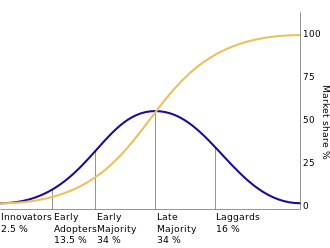

Agile is rising. It is starting to become mainstream. It is getting out of the early adopters category.

Follow the blue line in the diffusion of innovations graph above. Agile is in the early adopters stage, but it will soon rise into the majority section. When that happens, we will write even more software, faster and better. We will need more performant CI servers, tools and architectures. There are hard times ahead of us.
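The adopter categories in Everett Rogers' diffusion of innovations model come from a bell curve of adoption times: innovators lie more than two standard deviations before the mean, early adopters between two and one standard deviations before it, the early and late majority within one standard deviation on either side, and laggards more than one standard deviation after. Assuming the graph above follows that model, the sketch below recovers the category sizes from the normal distribution; it says nothing about where Agile actually sits on the curve.

```python
from math import erf, sqrt


def normal_cdf(z: float) -> float:
    """Cumulative probability of the standard normal distribution at z."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


# Category boundaries, in standard deviations from the mean adoption time.
CATEGORIES = [
    ("innovators", float("-inf"), -2.0),
    ("early adopters", -2.0, -1.0),
    ("early majority", -1.0, 0.0),
    ("late majority", 0.0, 1.0),
    ("laggards", 1.0, float("inf")),
]


def adopter_shares() -> dict:
    """Fraction of all eventual adopters that falls into each category."""
    return {name: normal_cdf(hi) - normal_cdf(lo) for name, lo, hi in CATEGORIES}


for name, share in adopter_shares().items():
    print(f"{name:15s} {share:6.1%}")
```

The exact values come out at about 2.3%, 13.6%, 34.1%, 34.1% and 15.9%, which Rogers rounded to 2.5%, 13.5%, 34%, 34% and 16%. Reaching the majority section means roughly 16% of eventual adopters are already on board.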

So where to go with CI from now on?

Integration times went down dramatically in the past 30 years: from 3 days, to 3 hours, to 30 minutes, to 3 minutes. Five years ago I worked on a project whose result was a 100MB ISO image. Going from source to update took about 30 minutes. Today we have a 700MB ISO, and it takes 3 minutes. That is a build ten times faster for an image seven times larger, roughly a 70x increase in throughput, in only the past five years. I expect this trend to continue exponentially.
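The jump is easiest to see as throughput, megabytes of finished image per minute of build. A quick check using only the two data points quoted above:

```python
# Figures from the text: five years ago vs. today.
old_size_mb, old_minutes = 100, 30
new_size_mb, new_minutes = 700, 3

old_rate = old_size_mb / old_minutes   # ~3.3 MB of image per minute
new_rate = new_size_mb / new_minutes   # ~233.3 MB of image per minute

print(f"time: {old_minutes / new_minutes:.0f}x faster")   # time: 10x faster
print(f"size: {new_size_mb / old_size_mb:.0f}x larger")   # size: 7x larger
print(f"throughput: {new_rate / old_rate:.0f}x higher")   # throughput: 70x higher
```

The two improvements multiply: a tenfold shorter build times a sevenfold larger output gives a seventyfold throughput gain.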

In the next five years, build times will shrink further. Smaller projects will achieve true continuity in integration: you will see the changes you make to a project almost instantaneously. The whole cycle described above will be on the order of 3-15 seconds.

At the same time, the complexity of projects will rise. We will write more and more complex software. We will compile more and more source code. We will need to find ways to integrate these complex systems. I expect hard times for CI tools. They will need to find a balance between high configurability and ease of use. They must be simple enough for everyone to use, seamless, and require interaction only when something goes wrong.

What about hardware? Processing power is starting to hit its limits. Parallel processing is rising and seems to be the only way to go. We can't make processors much faster, but we can put a bunch of them into a single server.
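For a build, putting many processors to work means compiling independent modules at the same time while still respecting dependencies. A minimal sketch using Python's standard `graphlib.TopologicalSorter` (Python 3.9+) and a thread pool; the module names and the `compile_module` stand-in are hypothetical, and a real build would launch a compiler instead of returning a string.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def compile_module(name: str) -> str:
    """Hypothetical stand-in for invoking a compiler on one module."""
    return f"{name}.o"


def parallel_build(deps: dict, workers: int = 4) -> list:
    """Build every module after its dependencies, running independent ones in parallel.

    deps maps each module to the set of modules it depends on.
    Returns the modules in the order their builds finished.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()                      # raises CycleError on circular dependencies
    finished = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        running = {}
        while sorter.is_active():
            # Start every module whose dependencies are all built.
            for name in sorter.get_ready():
                running[pool.submit(compile_module, name)] = name
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                name = running.pop(future)
                future.result()           # re-raise if this compile failed
                sorter.done(name)         # unlock modules that depend on it
                finished.append(name)
    return finished


deps = {
    "kernel": set(),
    "libc": {"kernel"},
    "python": {"libc"},
    "php": {"libc"},
    "installer": {"python", "php"},
}
print(parallel_build(deps))
```

Python and PHP both depend only on libc, so they compile side by side, and their relative order in the output may vary between runs. Threads suffice here because a real compile step would mostly wait on an external compiler process; for CPU-heavy work done in Python itself, `ProcessPoolExecutor` would be the usual swap.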

Another issue with hardware is how fast you can write all that data to disk. Fortunately for us, SSDs are starting to take over from HDDs for everyday data storage. Archiving will probably stay on rotating disks for the next five years, but we are hitting the physical limits of the material there as well. And yes... humanity's digital data grows at an alarming rate. In 2013, the digital universe was 4.4 zettabytes. That is 4.4 billion terabytes! By 2020 it is estimated to be 10 times more, 44 zettabytes. And each person on the planet will generate on average 1.5 MB of data every second. Let's say we are 7 billion: that is 10.5 billion MB of new data every second, 630 billion MB every minute, and 37,800 billion MB every hour. In other words, about 37.8 billion GB every hour, or roughly 0.9 zettabytes each day.
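Scaling the per-person estimate up is simple arithmetic. A quick check, using the assumptions stated above (1.5 MB per person per second, 7 billion people) and decimal units (1 GB = 1,000 MB, 1 ZB = 10^12 GB):

```python
MB_PER_PERSON_PER_SECOND = 1.5
PEOPLE = 7_000_000_000
MB_PER_GB = 1_000
GB_PER_ZB = 1_000_000_000_000   # 10**12 GB in a zettabyte (decimal units)

mb_per_second = MB_PER_PERSON_PER_SECOND * PEOPLE   # 10.5 billion MB
mb_per_minute = mb_per_second * 60                  # 630 billion MB
gb_per_hour = mb_per_minute * 60 / MB_PER_GB        # 37.8 billion GB
zb_per_day = gb_per_hour * 24 / GB_PER_ZB           # ~0.91 ZB

print(f"{mb_per_second / 1e9:.1f} billion MB per second")
print(f"{mb_per_minute / 1e9:.0f} billion MB per minute")
print(f"{gb_per_hour / 1e9:.1f} billion GB per hour")
print(f"{zb_per_day:.2f} ZB per day")
```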

It is estimated that in 2020 alone we will produce another 40 zettabytes of data, effectively doubling the enormous quantities we had already produced. The trick with the growth of the digital universe is that it is exponential, not linear. It is like an epidemic: each doubling adds more than all the previous growth combined.
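Exponential growth is easiest to reason about through its doubling time. Taking the two estimates quoted earlier, 4.4 ZB in 2013 and 44 ZB in 2020, and assuming steady exponential growth in between, the doubling time is T_d = t · ln 2 / ln(growth factor):

```python
from math import log

start_zb, end_zb = 4.4, 44.0   # estimates quoted in the text
years = 2020 - 2013

growth_factor = end_zb / start_zb                      # 10x over 7 years
doubling_time = years * log(2) / log(growth_factor)    # years per doubling
annual_growth = growth_factor ** (1 / years) - 1       # equivalent yearly rate

print(f"doubling time: {doubling_time:.1f} years")
print(f"annual growth: {annual_growth:.0%}")
```

Under that assumption the digital universe doubles about every 2.1 years, which corresponds to roughly 39% growth per year.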

And all that data will have to be managed by software you and I write. Software that will have to be so good, so performant, so reliable, that all that data will be perfectly safe. And to produce software like that, we will need tools like CI and CD architectures capable of managing enormous quantities of source code.

What about AI? There have been some great strides in artificial intelligence lately. We went from basically nothing to a great Go player. But that is still far from real intelligence. However, the first applications of AI to CI have been prototyped recently. MIT released a prototype software analysis and repair AI in mid-2015. It actually found and fixed bugs in some pretty complex open source projects. So there is a chance that by 2020 we will have at least some smart code-analysis AIs able to find bugs in our software.

If you are curious to learn more about this topic, or simply want to share your view, I invite you to my keynote speech at DevTalks Bucharest / Romania, on June 9th, 2016. As always, I will be open to discuss this and other IT, software and hardware topics throughout the event. Just ping me on Twitter if you are around.
